Google is making significant strides with Gemini, its flagship suite of generative AI models, apps, and services.

So, what exactly is Gemini? How can you leverage its capabilities? And how does it compare to other offerings in the market?

To help you stay abreast of the latest Gemini developments, we’ve compiled this comprehensive guide, which we’ll continuously update as new Gemini models, features, and news about Google’s plans for Gemini are unveiled.

What is Gemini?

Gemini is Google’s highly anticipated next-generation GenAI model family, developed by Google’s AI research labs DeepMind and Google Research. It comes in three variations:

- Gemini Ultra: The highest-performing Gemini model.

- Gemini Pro: A “lite” version of Gemini.

- Gemini Nano: A smaller “distilled” model designed for mobile devices like the Pixel 8 Pro.

All Gemini models are trained to be inherently multimodal, meaning they can seamlessly handle more than just text. They undergo pretraining and fine-tuning across various audio, image, and video datasets, as well as diverse codebases and text in multiple languages.

This multimodal capability sets Gemini apart from models like Google’s LaMDA, which was trained exclusively on text and is limited to text-related tasks. Gemini models, by contrast, can understand and generate content beyond text, including images and videos.

What’s the distinction between Gemini apps and Gemini models?

Initially, Google didn’t explicitly differentiate Gemini from its corresponding apps on the web and mobile platforms (previously known as Bard). The Gemini apps serve as an interface for accessing specific Gemini models, essentially acting as clients for Google’s GenAI. Additionally, Gemini apps and models operate independently of Imagen 2, Google’s text-to-image model available in certain development environments.

What can Gemini do?

Because Gemini models are multimodal, they can in theory perform a range of tasks, such as speech transcription, image and video captioning, and artwork generation. Some of these capabilities are still in development, but Google promises a wide array of functionalities in the near future.

Gemini Ultra, for instance, is touted to assist with tasks like physics homework, problem-solving, and scientific paper analysis, leveraging its multimodal prowess. Although the model is capable of image generation, that capability is not yet integrated into the productized version of the model.

Gemini Pro boasts enhanced reasoning and understanding abilities compared to LaMDA, as validated by independent studies. The latest iteration, Gemini 1.5 Pro, offers improvements in data processing capacity and can be customized for specific contexts and use cases.

Gemini Nano, a smaller variant of Gemini models, runs efficiently on select mobile devices, enabling features like Smart Reply in Gboard and Magic Compose in Google Messages.

Is Gemini superior to OpenAI’s GPT-4?

Google claims superior performance for Gemini models based on benchmark comparisons, asserting that Gemini Ultra surpasses the previous state-of-the-art results on a majority of widely used academic benchmarks. However, early impressions suggest room for improvement, with users noting shortcomings in basic facts, translations, and coding suggestions.

What is the cost of Gemini?

Gemini 1.5 Pro is currently free to use within the Gemini apps, AI Studio, and Vertex AI during the preview phase. Once out of preview, usage incurs charges based on character count or image processing.
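Billing by character count and by image comes down to simple arithmetic. Here is a minimal sketch in Python of how such a charge might be estimated. The `Rates` class, the `estimate_cost` function, and the rate values are hypothetical placeholders, not Google’s prices, and the real billing rules may differ (for example, by pricing input and output separately).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical prices; replace with figures from Google's price list."""
    per_1k_chars: float  # price per 1,000 characters processed
    per_image: float     # price per image processed


def estimate_cost(char_count: int, image_count: int, rates: Rates) -> float:
    """Estimate the charge for one request billed by characters and images."""
    char_cost = char_count / 1000 * rates.per_1k_chars
    image_cost = image_count * rates.per_image
    return char_cost + image_cost


# Placeholder rates, chosen only to make the arithmetic easy to follow.
example_rates = Rates(per_1k_chars=1.0, per_image=5.0)

# 2,000 characters and 1 image: (2000 / 1000 * 1.0) + (1 * 5.0)
example_cost = estimate_cost(char_count=2000, image_count=1, rates=example_rates)
```

The rates are passed in explicitly rather than hard-coded, so the same function still works when the published prices change.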

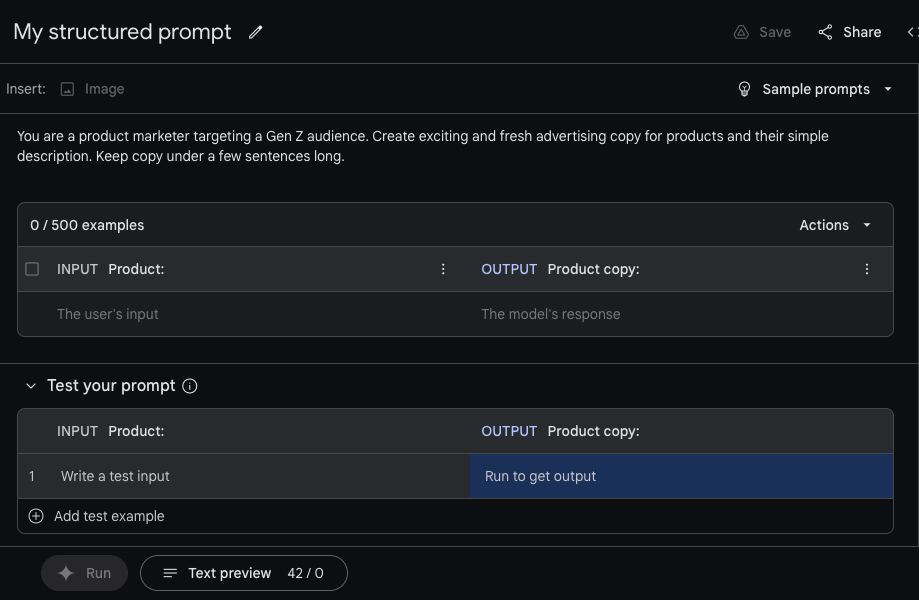

Where can Gemini be accessed?

Gemini Pro and Ultra are available in the Gemini apps and accessible via Vertex AI and AI Studio. Gemini Nano is integrated into select mobile devices and will expand to other platforms in the future.
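For developers, access outside the apps usually means calling an HTTP API with a key. The sketch below builds such a request in Python without sending it. The endpoint URL, the `gemini-pro` model name, and the request body shape are assumptions based on the public Gemini API and should be checked against Google’s current documentation. The `build_request` helper is illustrative only.

```python
import json
import urllib.request

# Assumed endpoint base for the Gemini API; verify against the current docs.
API_BASE = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(prompt: str, api_key: str, model: str = "gemini-pro") -> urllib.request.Request:
    """Build (but do not send) a text-generation request for a Gemini model."""
    url = f"{API_BASE}/{model}:generateContent?key={api_key}"
    # Assumed body shape: a list of contents, each holding a list of parts.
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request("Summarize this paragraph.", api_key="YOUR_API_KEY")

# Sending the request needs a real key and network access:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
```

Building the request separately from sending it makes it easy to inspect the URL and payload before any billable call is made.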

Is Gemini coming to iOS?

Apple and Google are reportedly in discussions about incorporating Gemini into future iOS updates. Nothing is confirmed yet, as Apple is also exploring options with OpenAI and developing its own generative AI capabilities.

This post was initially published on February 16, 2024, and has been updated to reflect new information about Gemini and Google’s plans for its development.