Artificial intelligence (AI) is transforming many areas of human life, including medicine. One notable example is the use of ChatGPT to help identify complex medical conditions that have eluded healthcare professionals.

In a recent case, ChatGPT helped point toward the diagnosis of a complicated medical condition, illustrating both the strengths and the limitations of AI in healthcare. This article examines that case and the advantages and limitations of using ChatGPT for medical diagnosis.

The case, reported by Today.com, involves a boy named Alex. Alex had suffered from chronic pain for three years, during which he saw 17 different doctors without receiving a clear diagnosis. As a last resort, his mother, Courtney, turned to ChatGPT for help. She entered his symptoms and MRI results into the chatbot, which suggested “tethered cord syndrome.” A neurosurgeon later confirmed the diagnosis.

The use of AI in healthcare has drawn growing interest and debate. In Alex’s case, ChatGPT proved to be a valuable tool for reaching a diagnosis that had eluded multiple medical professionals. Like any emerging technology, however, it has advantages and limitations that deserve careful consideration.

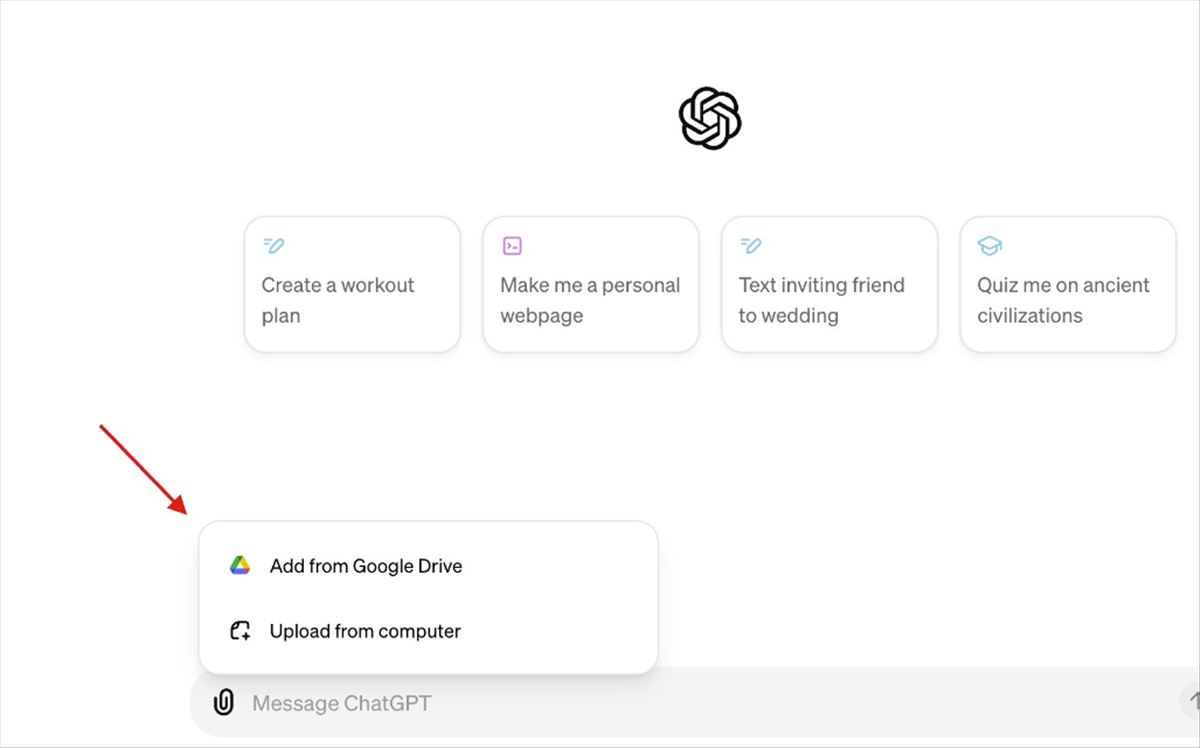

One of ChatGPT’s main advantages is that it was trained on a vast body of text that includes medical literature, case reports, and other sources. As a result, it can raise a wide range of possible conditions, including rare ones that even a specialist might overlook. Because it is available to anyone with an internet connection, it can be especially useful for people who lack easy access to specialized medical care or who want a second opinion. It can also process large amounts of information quickly, which could speed up diagnosis in complex cases that would otherwise require consulting many separate sources.

However, ChatGPT also has limitations that need to be acknowledged. Despite its ability to process information, it lacks the clinical judgment that doctors acquire through years of training and experience. It cannot perform a physical examination or pick up on subtle nuances in a patient’s symptoms, which may be crucial for an accurate diagnosis. Like other AI systems, it can also make errors, including “hallucinations,” in which it states false information with apparent confidence. In a medical context, such errors could have severe consequences, including misdiagnosis. Finally, the use of AI in medicine raises ethical questions that have yet to be fully resolved, such as who is responsible when a misdiagnosis occurs and how patient data should be handled.

Although AI shows great promise, the American Medical Association warns that it should be used with caution because of its limitations and potential risks to patients. Just as doctors worry when patients rely on internet searches before a consultation, ChatGPT’s medical suggestions should be treated with similar caution.

In conclusion, Alex’s case illustrates how AI tools such as ChatGPT can assist in complex diagnoses. However, it is crucial to recognize their limitations, including the lack of clinical expertise and the potential for errors. The ethical questions raised by AI in healthcare also demand careful attention. As AI continues to evolve, balancing its advantages against its limitations will be essential to harnessing its potential to improve healthcare.